Artificial Intelligence (AI) has made significant strides in education, offering innovative tools and solutions to enhance learning. However, as AI continues to infiltrate classrooms, it’s crucial to be aware of the potential dangers it poses in school settings.

One of the primary concerns is the breaching of privacy. AI systems often collect vast amounts of sensitive data on students, including their academic performance, behavior patterns, and even personal information. Without strict safeguards in place, this data could be vulnerable to breaches, potentially leading to identity theft or other forms of cyber exploitation.

In addition to these concerns, there’s a growing worry about the erosion of critical thinking skills in the presence of AI. As AI systems become more sophisticated in providing instant answers and solutions, students might become passive consumers of information rather than active seekers of knowledge. This could hinder their ability to analyze, evaluate, and apply information in real-world contexts, a skill set that is crucial for lifelong learning and success.

Furthermore, there’s the risk of over-reliance on AI, which might stifle critical thinking and creativity. Relying heavily on AI-driven teaching methods could discourage students from developing essential problem-solving skills, as they may grow accustomed to receiving quick answers and solutions. The use of AI keeps students from reaching their full potential and promotes laziness instead of growth.

Another significant danger lies in the potential for AI to increase educational inequalities. While some schools and institutions may have access to cutting-edge AI-powered tools, others may be left behind due to budget constraints or lack of technical infrastructure. This digital divide could widen the gap between privileged and underserved communities, leaving the latter at a further disadvantage in an already competitive educational landscape.

Moreover, there’s the potential for a loss of human touch in education. While AI can provide valuable insights, it cannot replace the nuanced understanding and empathy that human educators bring to the table. Students need emotional support, mentorship, and personalized guidance that only human teachers can provide.

Lastly, there’s the concern of job displacement for educators. As AI becomes more integrated into classrooms, there may be a temptation to replace human teachers with automated systems. While AI can enhance the learning experience, it cannot replace the complex and dynamic interactions that occur between students and teachers. The loss of teaching jobs could have significant economic and social implications, affecting not only educators but also the communities they serve.

In conclusion, while AI holds immense potential to revolutionize education, it is essential to approach its integration with caution and a keen awareness of potential dangers. Striking a balance between innovation and ethical considerations is crucial to ensure that AI enhances education without compromising the well-being and privacy of students.

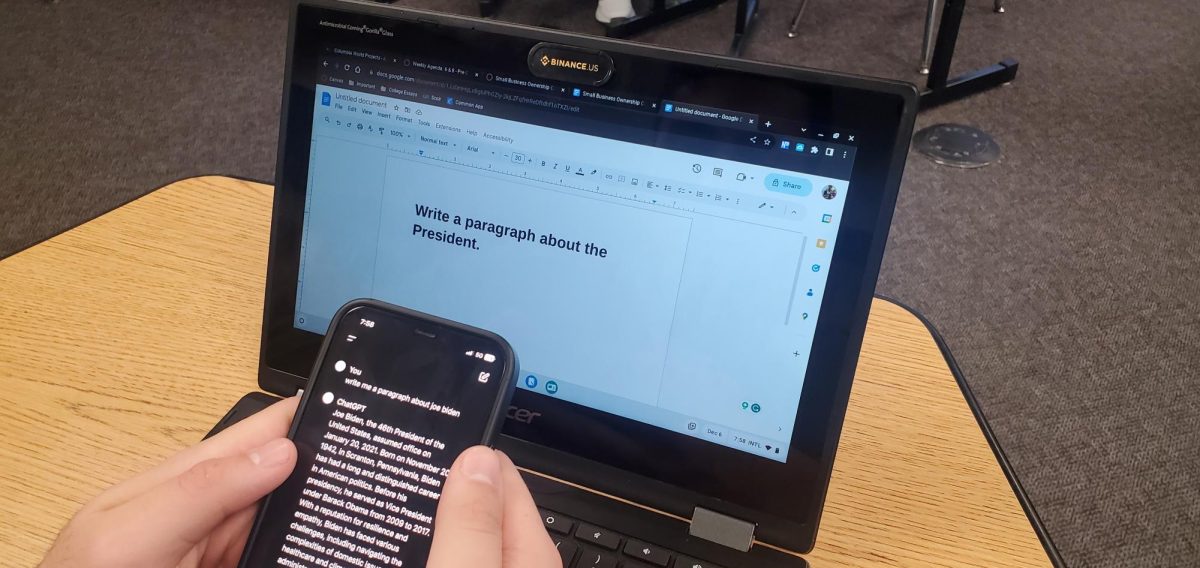

I did not write this article.

I typed a prompt into ChatGPT and it ‘wrote’ this entire article and created a title. It took only about 30 seconds to think of a good prompt, type it in, and copy and paste the result into a fresh document. With very few adjustments to word choice, I made this article sound like a human actually wrote it.

The machine raises some fair points: using AI in classrooms will cause children to rely on getting answers quickly rather than completing the work themselves. Students would benefit from a human teacher who can readily explain concepts in a way they understand.

It is scary that a machine is able to pull information from numerous sources and mash it all together to create an article so similar to human writing. However, in this article, there are no real-life human additions.

There are no quotes, no opinions, and no student voices. My advisor told me that if I were to hand it in, he would just give it back because of this. To any students who are using AI for their work, think twice about handing it in; teachers will know whether you wrote it or not.

Willa Magland • Dec 7, 2023 at 8:15 am

You fooled me! I think there’s a lot of blurring of what these recent LLMs are and aren’t good for. Being language models, they are trained to replicate human speech, and therefore come with many of the flaws and biases we have. I think the way they have been implemented makes them appear to be sources of information; one example is the integration of ML into the Bing search engine, an information resource that often serves inaccurate information. We’ll see how it all turns out.